In a study that brings together music and neuroscience, researchers have reconstructed Pink Floyd’s “Another Brick in the Wall, Part 1” from recordings of brain activity. The work, led by researchers at the University of California, Berkeley, advances our understanding of how the brain processes music. It could also help brain-computer interfaces give more natural speech to people with communication impairments.

The recordings were made at Albany Medical Center, where neuroscientists monitored patients who were being prepared for epilepsy surgery. Electrodes placed on the surface of the patients’ brains captured neural activity while the patients listened to music, including Pink Floyd’s “Another Brick in the Wall, Part 1.” The goal was to capture the electrical activity of brain regions tuned to different musical attributes (melody, rhythm, harmony, and lyrics) and, ultimately, to reconstruct the song as the listener heard it.

Using recordings from 29 patients, some collected more than a decade earlier, the research team reconstructed a recognizable version of the song. The phrase “All in all it was just a brick in the wall” comes through in the reconstruction with its rhythm intact, and the words are muddy but still decipherable. According to the researchers, this is the first time a recognizable song has been reconstructed from neural recordings, showing how much musical detail is encoded in brain activity.

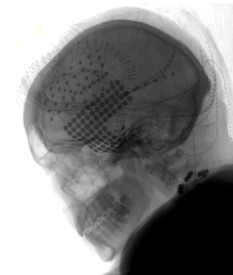

The placement of electrodes on the brain’s surface is visualized in an X-ray, illustrating the technology used to record neural activity during the Pink Floyd “Brick in the Wall” song experiment.

The reconstruction does more than identify a song. It shows that brain waves can be recorded and translated to capture the musical elements of speech, known as prosody: the rhythm, stress, accent, and intonation that convey meaning and emotion beyond the literal words. Current brain-machine interfaces can decode words, but the speech they produce typically lacks the expressive musicality of natural human speech.

These intracranial electroencephalography (iEEG) recordings can only be made from the surface of the brain, close to the auditory processing centers. Because the method is invasive, reading someone’s musical preferences from afar remains science fiction. For people who have lost the ability to speak because of stroke, paralysis, or similar conditions, however, the technology could help restore the expressive, musical qualities of speech that today’s robotic-sounding communication devices lack.

“It’s a wonderful result,” said Robert Knight, a neurologist and UC Berkeley professor of psychology in the Helen Wills Neuroscience Institute, who led the study with postdoctoral fellow Ludovic Bellier. “Music, for me, is deeply intertwined with prosody and emotional content. As brain-machine interfaces advance, this research provides a means to incorporate musicality into future brain implants for individuals with conditions like ALS or other neurological disorders affecting speech. It unlocks the potential to decode not just the linguistic content but also the prosodic content – the emotional affect – of speech. I believe we’ve truly begun to crack this code.”

As brain recording technology improves, it may become possible to capture such signals less invasively. Today, scalp EEG, which measures brain activity through electrodes placed on the scalp, can detect an individual letter from a stream of letters. However, it takes approximately 20 seconds to identify each letter, which makes communication slow and laborious.

“Noninvasive techniques are not yet sufficiently accurate,” Bellier cautioned. “We hope that in the future, for the benefit of patients, we can achieve high-quality signal readings from deeper brain regions using only electrodes placed externally on the skull. However, we are still far from reaching that point.”

Bellier, Knight, and their colleagues published their findings in the journal PLOS Biology. They noted that their work adds “another brick in the wall of our understanding of music processing in the human brain,” a nod to the song at the center of the study.

Decoding the Musical Mind: Beyond Words to Emotion

Current brain-machine interface technology can decode words for communication, but it often produces flat, robotic-sounding speech reminiscent of the synthesized voice of the late Stephen Hawking.

A visual representation comparing the original Pink Floyd “Another Brick in the Wall” song segment with the reconstructed version derived from brain activity recordings.

“Currently, the technology is more akin to a keyboard for the mind,” Bellier explained. “It cannot directly read thoughts. It requires conscious effort, like pressing keys on a keyboard, and the resulting voice tends to be robotic, lacking expressive freedom.”

Bellier is a musician who has played drums, classical guitar, and piano, and has even played in a heavy metal band, so he was eager to explore the musicality of speech. Recalling his reaction when Knight invited him to work on the project, he said, “You bet I was excited!”

In 2012, Knight, postdoctoral fellow Brian Pasley, and their collaborators became the first to reconstruct the words a person was hearing from recordings of brain activity alone. That work laid the foundation for later research on brain-based communication.

Building on this work, Eddie Chang, a neurosurgeon at UC San Francisco and a senior co-author of the 2012 study, and his team recorded signals from the motor cortex, the brain region that controls movements of the jaw, lips, and tongue. They used these signals to reconstruct the speech a paralyzed patient was attempting to produce and displayed the decoded words on a computer screen. That 2021 study used artificial intelligence to interpret brain recordings made while the patient tried to say sentences drawn from a 50-word vocabulary.

Chang’s motor-cortex approach has proven effective, but the new Pink Floyd study suggests that decoding signals from the auditory cortex has its own advantages. Because the auditory cortex processes sound itself, it can capture elements of speech beyond word content, including the prosodic features that enrich human communication.

“Decoding from the auditory cortices, which are closer to the acoustic properties of sounds, as opposed to the motor cortex, which is linked to the physical movements of speech production, is incredibly promising,” Bellier elaborated. “It has the potential to add a richer, more nuanced quality to decoded speech.”

For this latest study, Bellier re-analyzed brain recordings collected between 2008 and 2015 as patients listened to the approximately 3-minute segment of Pink Floyd’s “Another Brick in the Wall, Part 1,” from the 1979 album The Wall. His aim was to move beyond simply identifying musical pieces or genres, as in previous studies, and to reconstruct actual musical phrases using regression-based decoding models.
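The article does not describe the decoding models beyond calling them “regression-based.” As a rough illustration only, the sketch below fits a linear ridge-regression decoder that maps multi-electrode activity to the frequency bins of an audio spectrogram, then scores the reconstruction on held-out time points. Everything here is an assumption for illustration: the function names, the ridge penalty, the 64 “electrodes” and 32 frequency bins, and the simulated data. This is not the study’s actual pipeline.

```python
import numpy as np


def fit_ridge_decoder(neural, spectrogram, alpha=1.0):
    """Fit weights W so that neural @ W approximates the spectrogram.

    neural: (n_timepoints, n_electrodes) array of neural activity.
    spectrogram: (n_timepoints, n_freq_bins) array describing the audio.
    alpha: ridge penalty; larger values shrink the weights toward zero.
    """
    n_features = neural.shape[1]
    # Closed-form ridge solution: W = (X^T X + alpha * I)^-1 X^T Y
    gram = neural.T @ neural + alpha * np.eye(n_features)
    return np.linalg.solve(gram, neural.T @ spectrogram)


def decode(neural, weights):
    """Predict spectrogram frames from neural activity."""
    return neural @ weights


def mean_bin_correlation(predicted, actual):
    """Average Pearson correlation between predicted and actual values per frequency bin."""
    corrs = [np.corrcoef(predicted[:, k], actual[:, k])[0, 1] for k in range(actual.shape[1])]
    return float(np.mean(corrs))


# Synthetic stand-in data (hypothetical): the "song" is a random spectrogram,
# and each simulated electrode responds linearly to it, plus noise.
rng = np.random.default_rng(0)
n_time, n_elec, n_bins = 2000, 64, 32
spectrogram = rng.normal(size=(n_time, n_bins))
encoding = rng.normal(size=(n_bins, n_elec))
neural = spectrogram @ encoding + rng.normal(size=(n_time, n_elec))

# Train on the first 80% of time points, then reconstruct the held-out remainder.
split = int(0.8 * n_time)
W = fit_ridge_decoder(neural[:split], spectrogram[:split], alpha=1.0)
reconstruction = decode(neural[split:], W)
score = mean_bin_correlation(reconstruction, spectrogram[split:])
print(f"held-out correlation: {score:.2f}")
```

Evaluating on time points the decoder never saw during fitting is what makes the score meaningful. A model that only memorized its training data would not reconstruct new stretches of the song.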

Bellier emphasized that the study, which used artificial intelligence to decode brain activity and then re-encode it as music, is not merely a “black box” for synthesizing sound. The researchers also identified brain regions involved in detecting rhythm, such as the strumming of a guitar. They found that some areas of the auditory cortex, in the superior temporal gyrus located just behind and above the ear, respond at the onset of a voice or a synthesizer, while other areas respond to sustained vocals.

Furthermore, the study corroborated the long-held understanding that the right hemisphere of the brain is more attuned to music than the left. “Language is predominantly processed in the left brain, whereas music is more diffusely processed, with a bias towards the right hemisphere,” Knight noted.

“It was not initially clear whether this hemispheric specialization would extend to musical stimuli,” Bellier added. “Our findings confirm that this is not solely a speech-specific phenomenon but rather a fundamental characteristic of the auditory system and its processing of both speech and music.”

Knight is now beginning new research into the brain circuits that allow some people with aphasia caused by stroke or brain injury to communicate by singing, even when they struggle to find the words to speak.

The research team also included Helen Wills Neuroscience Institute postdoctoral fellows Anaïs Llorens and Déborah Marciano, Aysegul Gunduz from the University of Florida, and Gerwin Schalk and Peter Brunner from Albany Medical College in New York and Washington University, who captured the brain recordings. The project was funded by the National Institutes of Health and the BRAIN Initiative, a public-private partnership that aims to accelerate the development of innovative neurotechnologies.
